When we don’t use Splunk for ad-hoc analysis, we build reports, dashboards and alerts with it, frequently for security-sensitive applications.

Often, no automated tests are written to check the integrity of these applications.

I think it’s a good idea to change that!

Why bother?

There are two big benefits to automated testing:

- Not getting yelled at

- Faster development

I’ll let you in on a little secret:

Most things I program are not initially bug-free. Shocking, I know!

The real challenge is finding these bugs myself and not letting the customer find them later in production. This is important, because the second case leads to me getting yelled at.

But who has time for this stuff?

Let me admit: Sometimes testing slows you down. If you start with a big bowl of legacy spaghetti-code and you need to retroactively test it all, then it can be excruciatingly slow.

On the other hand, if you don’t test the bowl of spaghetti before cooking more, you will get yelled at later. Probably a lot. This case is a trade-off between saving time and getting yelled at.

Starting with a bowl of spaghetti is always a bad situation: Someone already started off in the wrong way and repairs take time. When you start the right way, testing will make you faster!

This is due to two factors:

- When you develop with the mindset of testing each new functionality, you think about writing code that is easy to test. Easy to test is also easy to build on. Working test-driven leads to better, more modular code!

- Did you ever make a small code-improvement, only to wonder what other part you may have broken? Well, I wonder about that all the time. Then I run my tests and check! When all important functionalities have tests, it is a matter of seconds to see what broke and fix it.

To summarize:

“But Splunk dashboards don’t count as software development! Testing is just for proper software development!”

I don’t care about semantics. I care about not getting yelled at! And if important stuff depends on the figures in my Splunk dashboard, then I will probably get yelled at if these figures are incorrect. Hence, I think automated testing in Splunk is a splendid idea!

Conversely, if nobody cares enough about your dashboards to yell at you when they break, then you should save some time and not build them in the first place. Life is too short for useless dashboards!

Whether Splunking is software development or not: If Splunk is not exempt from Murphy’s law, then we must test our work!

What to test manually

Without getting too deep into the theory of testing, we can say: Testing GUI elements is probably best done manually, since testing them automatically involves things like browser automation, which is a lot of work.

Which tests to automate with Splunk

Splunk imports & indexes large amounts of data.

It then runs searches over this data.

These searches don’t just “search”. They split, apply, combine and aggregate the large amounts of data into smaller, but more interesting pieces of data.

We can easily test this whole process by feeding minimal sets of test data into the system and then checking whether the searches spit out the correct results. This way we check simultaneously:

- Does the data import work correctly?

- Do the searches return the correct results?
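To make "check whether the searches spit out the correct results" concrete, here is a minimal sketch of the comparison step. It assumes the search results arrive as a list of dictionaries (one per result row) and that the expected values live in a small CSV file, for example a sheet exported from a spreadsheet. The function names `load_expected`, `normalise` and `results_match` are made up for this illustration.

```python
import csv
import io


def load_expected(csv_text):
    """Parse expected result rows from CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def normalise(rows):
    """Make rows comparable: ignore row order and compare all values as strings."""
    return sorted(
        tuple(sorted((key, str(value)) for key, value in row.items()))
        for row in rows
    )


def results_match(actual, expected):
    """True if both result sets contain the same rows, in any order."""
    return normalise(actual) == normalise(expected)


# Hypothetical example: expected output of a "count by status" search.
expected = load_expected("status,count\n200,2\n404,1\n")
actual = [{"status": "404", "count": 1}, {"status": "200", "count": 2}]
assert results_match(actual, expected)
```

Comparing everything as strings is a deliberate simplification: CSV files only contain text, and search results often come back as strings too. If numeric precision matters for your figures, you would probably want a stricter comparison.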

OK, you convinced me, but how do I implement this quickly?

I am glad you asked, …

Challenges

- Known system state / have empty system

- External validation

To test anything in any system I need to start from a blank slate, or at least from a known state! If I don’t know what my system was like before the test, I can never be sure what my test did to it. In our case: I need to control the data in the index to validate my search results.

One possibility would be to clone my development instance of Splunk and empty its index before every test. We can then selectively fill the index with only the data for each test.

However, setting up a new Splunk instance creates some overhead. And since we probably don’t want to empty the index of our development instance for testing either, we need another solution.

Our solution is to create a new index specifically for testing and then swap it in for the production index in each search during testing.

This way we can use one Splunk instance for development and testing!

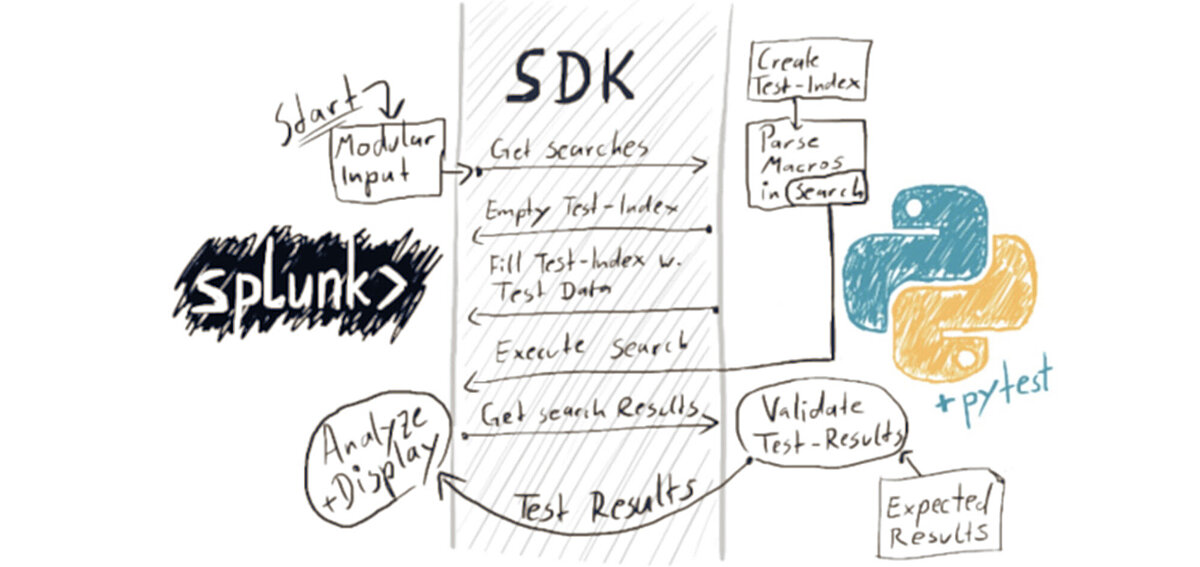
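The index swap itself can be plain string manipulation on the search text. The following is a rough sketch, not a full SPL parser: it assumes searches reference the index as `index=<name>` (optionally quoted, optionally with spaces around the equals sign). The function name `swap_index` and the index names in the example are invented for illustration.

```python
import re


def swap_index(search, prod_index, test_index):
    """Replace index=<prod_index> with index=<test_index> in a search string."""
    # \b keeps fields such as sub_index=... untouched; the lookahead avoids
    # matching longer index names (main_archive) or wildcards (main*).
    pattern = re.compile(
        r'\bindex\s*=\s*"?' + re.escape(prod_index) + r'"?(?![\w\-*])'
    )
    # A function as replacement avoids surprises with backslashes in names.
    return pattern.sub(lambda _match: f"index={test_index}", search)


original = "search index=main sourcetype=access | stats count by status"
print(swap_index(original, "main", "test_main"))
```

Searches that pick their index in other ways (for example `index IN (...)`, wildcards, or an index hidden inside a macro) are not covered by this sketch and would need extra handling.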

The steps to using pytest with Splunk

- Make saved searches out of all searches you want to test

  - Think about making repeated parts modular by encapsulating them into macros

- Build data for test cases

  - Make them minimal (=small!)

  - Check the results via Excel

- Connect Python to Splunk via Splunk’s Python SDK

- Use pytest to write tests for all saved searches

  - Get saved searches from Splunk

  - Exchange the real index for your test index

  - Parse all used macros

  - Use the amended search for your tests: It still has the same functionality but now uses the data from your test index.

- Run the tests

  - Create a new index just for testing

  - Clean it before each test

  - Inject just the test data to get a known system state

  - Manipulate the search to use the new test index

  - Compare results

  - Don’t hard-code expected results; link to Excel instead

- Turn Splunk into a continuous integration server (optional)

  - Run the pytest test suite directly from Splunk by using modular inputs to call Python scripts

- Export pytest output back into Splunk (optional)

  - Set up reports and alerts for failing tests in Splunk

  - Create a dashboard to get test results at a glance
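To give a feeling for the middle steps, here is a hedged sketch of how they might look with Splunk’s Python SDK (`splunklib`). All function names are made up for this illustration. The macro handling only inlines argument-free macros, and the index swap is a naive string replacement. The SDK calls (`service.indexes`, `index.clean`, `index.submit`, `service.saved_searches`, `service.jobs.oneshot`, `results.JSONResultsReader`) are used as I understand them from recent SDK versions; please check them against the version you have installed.

```python
import re

MACRO_CALL = re.compile(r"`([^`]*)`")  # SPL macro calls are wrapped in backticks


def expand_macros(search, macros, max_depth=10):
    """Inline argument-free macros; `macros` maps macro name -> definition."""
    def inline(match):
        name = match.group(1).strip()
        # Macros with arguments, e.g. `m(x)`, are left untouched in this sketch.
        return match.group(0) if "(" in name else macros[name]

    for _ in range(max_depth):  # macros may call other macros
        expanded = MACRO_CALL.sub(inline, search)
        if expanded == search:
            return expanded
        search = expanded
    raise ValueError("macro expansion did not settle; recursive macro?")


def prepare_test_index(service, test_index, events, sourcetype):
    """Bring the test index into a known state: create, empty, refill."""
    if test_index not in service.indexes:
        service.indexes.create(test_index)
    index = service.indexes[test_index]
    index.clean(timeout=60)  # remove leftovers from earlier tests
    for event in events:
        index.submit(event, sourcetype=sourcetype)


def run_saved_search(service, name, prod_index, test_index, macros):
    """Fetch a saved search, point it at the test index and run it once."""
    import splunklib.results as results  # lazy import; requires the Splunk SDK

    query = expand_macros(service.saved_searches[name]["search"], macros)
    query = query.replace(f"index={prod_index}", f"index={test_index}")  # naive swap
    if not query.lstrip().startswith(("search ", "|")):
        query = "search " + query  # one-shot searches need a leading command
    stream = service.jobs.oneshot(query, output_mode="json")
    # The reader yields result rows as dicts and diagnostic messages as objects.
    return [row for row in results.JSONResultsReader(stream) if isinstance(row, dict)]
```

In a pytest suite, `service` would typically come from a fixture that calls `splunklib.client.connect(...)` with your host and credentials, and `prepare_test_index` would run before each test. Keep in mind that freshly submitted events may need a moment before they show up in searches, so a real test will probably have to wait or poll before comparing results.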

Management Summary

In the next article, we can look at how exactly to use the Splunk SDK and how to resolve saved macros into searches. We can also convert Splunk into its own continuous integration server for testing. Let me know if you found different solutions to the challenge of testing Splunk apps!

In the meantime: Be safe! Don’t forget to test! 😊

Sebastian Rücker

info@advisori.de

Sebastian is ADVISORI’s Data Science Team Lead – he combines expertise in Machine Learning, Software Development, and Design Thinking.